SiFive Blog

The latest insights, and deeper technology dives, from RISC-V leaders

RISC-V Vector Extension Intrinsic Support

The RISC-V Vector extension (RVV) enables processor cores based on the RISC-V instruction set architecture to process arrays of data alongside traditional scalar operations, accelerating computation over large data sets within a single instruction stream.

The RISC-V International vector working group, composed of experts from industry and academia, is creating a standard extension that can be ratified for general adoption by anyone who chooses to implement RVV.

We’re incredibly pleased to announce that a team from SiFive and the Barcelona Supercomputing Center has collaborated to create a new API to support RISC-V Vector intrinsics in two popular compilers, GCC and LLVM. The API is available now on GitHub, here.

As RISC-V continues to work towards ratification of the final version of the RISC-V Vector Extension, support will continue to be added and updated, building an industry-wide toolchain that enables widespread adoption of vector processing based on RISC-V.

We have implemented the majority of the API in GCC to validate the currently proposed 1.0 specification. In addition to the RISC-V Vector specification, we have also implemented support for scalar and vector FP16 (Zfh), atomics (Zvamo), and segmented load/store (Zvlsseg) intrinsics. Full support of the currently proposed 1.0 specification has been implemented in the assembler.
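To give a sense of what intrinsic-based vector code looks like, here is a minimal sketch of an element-wise float addition written as a strip-mined loop. It rests on some assumptions: the function name `vec_add_f32` and the macro `HAVE_RVV_INTRINSICS` are hypothetical, and the `__riscv_`-prefixed intrinsic names follow later published versions of the intrinsics API, so they may differ from the names in the version described in this post. On toolchains without those intrinsics, the sketch falls back to a plain scalar loop.

```c
#include <stddef.h>

/* Assumption: the toolchain advertises a recent intrinsics API through the
 * __riscv_v_intrinsic version macro. Otherwise use the scalar fallback. */
#if defined(__riscv_v_intrinsic) && __riscv_v_intrinsic >= 12000
#include <riscv_vector.h>
#define HAVE_RVV_INTRINSICS 1
#else
#define HAVE_RVV_INTRINSICS 0
#endif

/* Hypothetical helper: out[i] = a[i] + b[i] for i in [0, n). */
void vec_add_f32(float *out, const float *a, const float *b, size_t n)
{
#if HAVE_RVV_INTRINSICS
    /* Strip-mining: each iteration asks the hardware how many 32-bit
     * elements (vl) it will process, then advances by that amount. */
    while (n > 0) {
        size_t vl = __riscv_vsetvl_e32m1(n);
        vfloat32m1_t va = __riscv_vle32_v_f32m1(a, vl);        /* load a */
        vfloat32m1_t vb = __riscv_vle32_v_f32m1(b, vl);        /* load b */
        vfloat32m1_t vc = __riscv_vfadd_vv_f32m1(va, vb, vl);  /* a + b  */
        __riscv_vse32_v_f32m1(out, vc, vl);                    /* store  */
        a += vl;
        b += vl;
        out += vl;
        n -= vl;
    }
#else
    /* Scalar fallback with the same semantics. */
    for (size_t i = 0; i < n; ++i) {
        out[i] = a[i] + b[i];
    }
#endif
}
```

Because the loop takes its step size from the hardware on each iteration, the same source should work across implementations with different vector register lengths. Calling `vec_add_f32(out, a, b, n)` with three arrays of at least `n` floats fills `out` with the element-wise sums.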

The team has integrated RVV intrinsics into riscv-gnu-toolchain, so you can build the intrinsic-enabled GNU toolchain as usual. We have tested the intrinsics extensively with our internal test suite and a few internal projects, and our teams have used them to implement a range of vector algorithms to help validate the vector implementation.

In the near future, RISC-V Vector intrinsics will be implemented in the LLVM compiler and the work will be submitted upstream. This announcement also serves as a call to arms for the RISC-V community to review and assist as we update the current implementation to the latest RISC-V Vector 1.0 draft specification.

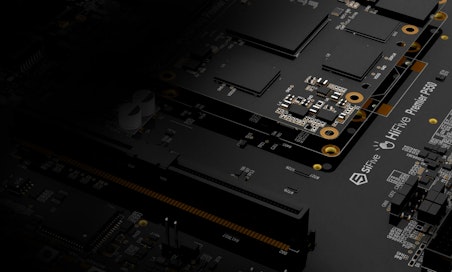

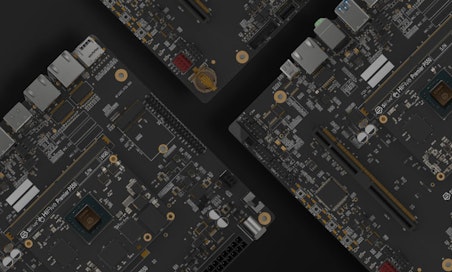

The hardware and software teams at SiFive are hard at work on SiFive Intelligence, our range of processor IP featuring RISC-V cores with vector capabilities. We’ll have more details on these cores later this year. Until then, we’re excited for the progress being made on building the ecosystem for converged processor cores that include scalable vector capabilities, to help design the domain-specific computing solutions needed for today’s computing challenges.

Domain-specific architectures based on open standards enable deeper levels of performance, efficiency, and vertical integration to provide differentiated computing solutions. The focus of the SiFive IP business unit is creating incredible processor core architectures that take a step forward from general-purpose CPUs and GPUs housed together in a system, to a deeper level of compute convergence. The industry-wide interest in and support for the RISC-V Vector extension is a great indicator of the potential of SiFive Intelligence.